IBM PowerNP™

NP2G

Network Processor

Preliminary

February 12, 2003

0.1 Copyright and Disclaimer

Copyright International Business Machines Corporation 2003

All Rights Reserved

US Government Users Restricted Rights - Use, duplication or disclosure restricted by GSA ADP Schedule Contract with IBM Corp.

Printed in the United States of America February 2003

The following are trademarks of International Business Machines Corporation in the United States, or other countries, or both.

IBM

IBM Logo

PowerPC

PowerNP

IEEE and IEEE 802 are registered trademarks of IEEE in all cases.

Other company, product and service names may be trademarks or service marks of others.

All information contained in this document is subject to change without notice. The products described in this document are NOT intended for use in applications such as implantation, life support, or other hazardous uses where malfunction could result in death, bodily injury, or catastrophic property damage. The information contained in this document does not affect or change IBM product specifications or warranties. Nothing in this document shall operate as an express or implied license or indemnity under the intellectual property rights of IBM or third parties. All information contained in this document was obtained in specific environments, and is presented as an illustration. The results obtained in other operating environments may vary.

While the information contained herein is believed to be accurate, such information is preliminary, and should not be relied upon for accuracy or completeness, and no representations or warranties of accuracy or completeness are made.

THE INFORMATION CONTAINED IN THIS DOCUMENT IS PROVIDED ON AN "AS IS" BASIS. In no event will IBM be liable for damages arising directly or indirectly from any use of the information contained in this document.

IBM Microelectronics Division

2070 Route 52, Bldg. 330

Hopewell Junction, NY 12533-6351

The IBM home page can be found at http://www.ibm.com

The IBM Microelectronics Division home page can be found at http://www.ibm.com/chips


Note: This document contains information on products in the sampling and/or initial production phases of development. This information is subject to change without notice. Verify with your IBM field applications engineer that you have the latest version of this document before finalizing a design.


Contents

About This Book

Who Should Read This Manual

Related Publications

Conventions Used in This Manual

1. General Information

1.1 Features

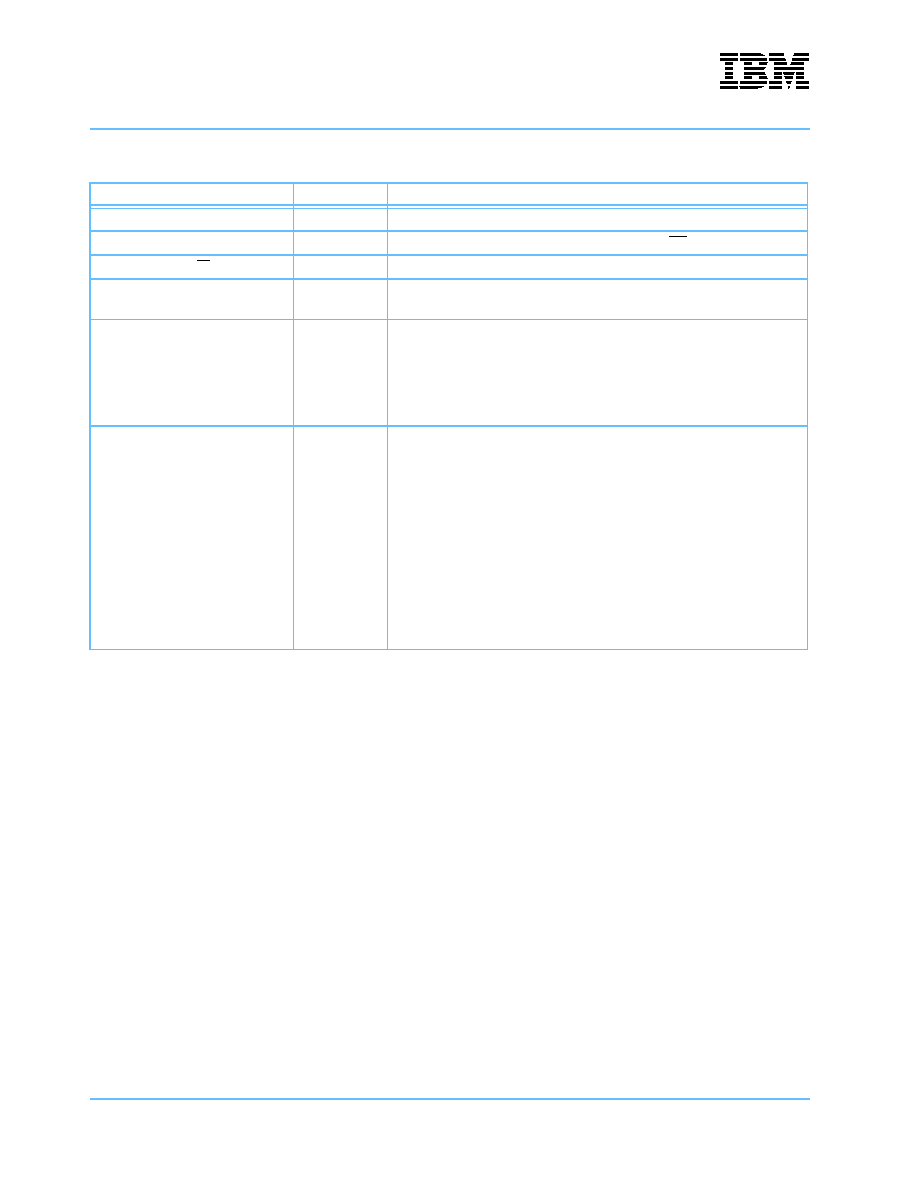

1.2 Ordering Information

1.3 Overview

1.4 NP2G-Based Systems

1.5 Structure

1.5.1 EPC Structure

1.5.1.1 Coprocessors

1.5.1.2 Enhanced Threads

1.5.1.3 Hardware Accelerators

1.5.2 NP2G Memory

1.6 Data Flow

1.6.1 Basic Data Flow

1.6.2 Data Flow in the EPC

2. Physical Description

2.1 Pin Information

2.1.1 Ingress-to-Egress Wrap (IEW) Pins

2.1.2 Flow Control Interface Pins

2.1.3 ZBT Interface Pins

2.1.4 DDR DRAM Interface Pins

2.1.4.1 D3, D2, and D0 Interface Pins

2.1.4.2 D4_0 and D4_1 Interface Pins

2.1.4.3 D6_x Interface Pins

2.1.4.4 DS1 and DS0 Interface Pins

2.1.5 PMM Interface Pins

2.1.5.1 TBI Bus Pins

2.1.5.2 GMII Bus Pins

2.1.5.3 SMII Bus Pins

2.1.6 PCI Pins

2.1.7 Management Bus Interface Pins

2.1.8 Miscellaneous Pins

2.1.9 PLL Filter Circuit

2.1.10 Thermal I/O Usage

2.1.10.1 Temperature Calculation

2.1.10.2 Measurement Calibration

2.2 Clocking Domains

2.3 Mechanical Specifications

2.4 IEEE 1149 (JTAG) Compliance

2.4.1 Statement of JTAG Compliance

2.4.2 JTAG Compliance Mode


2.4.3 JTAG Implementation Specifics

2.4.4 Brief Overview of JTAG Instructions

2.5 Signal Pin Lists

3. Physical MAC Multiplexer

3.1 Ethernet Overview

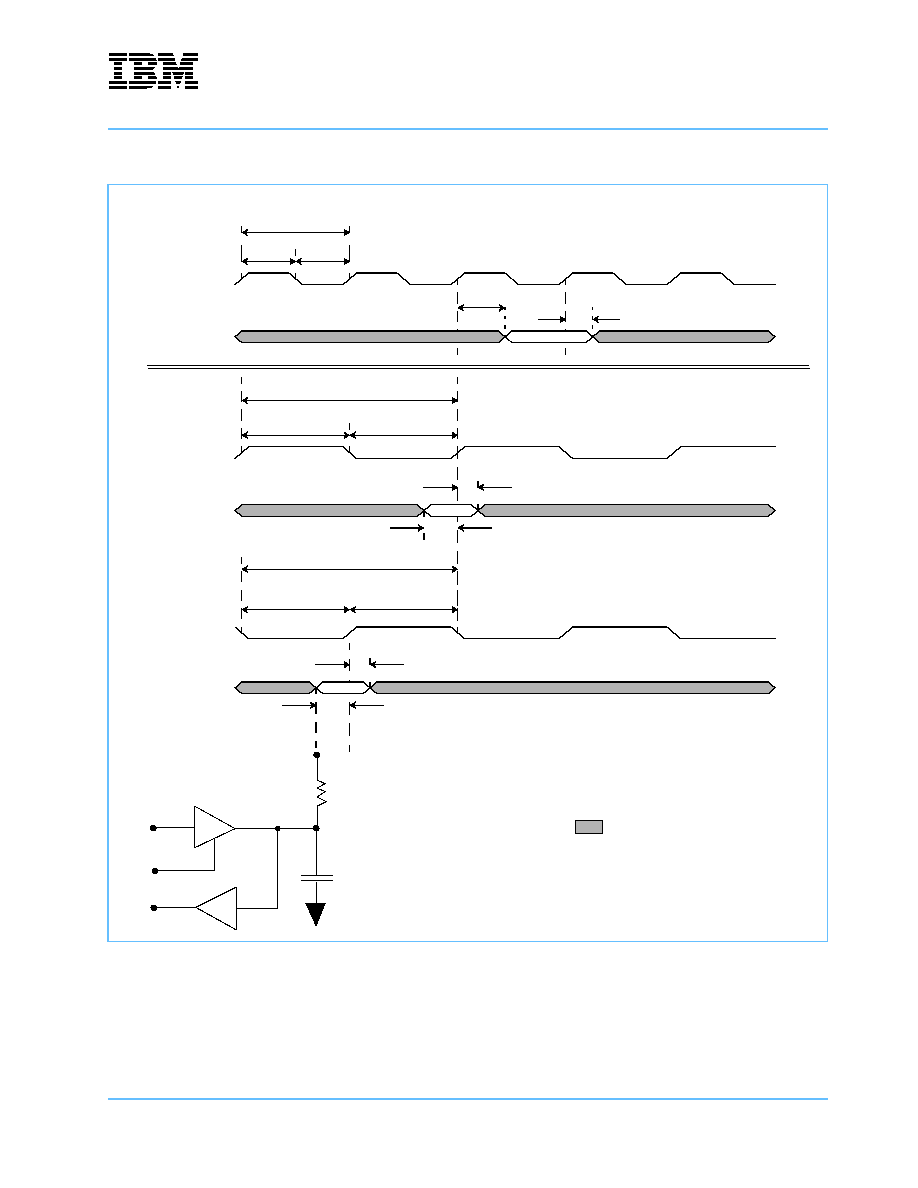

3.1.1 Ethernet Interface Timing Diagrams

3.1.2 Ethernet Counters

3.1.3 Ethernet Support

3.2 POS Overview

3.2.1 POS Timing Diagrams

3.2.2 POS Counters

3.2.3 POS Support

4. Ingress Enqueuer / Dequeuer / Scheduler

4.1 Overview

4.2 Operation

4.2.1 Operational Details

4.3 Ingress Flow Control

4.3.1 Flow Control Hardware Facilities

4.3.2 Hardware Function

4.3.2.1 Exponentially Weighted Moving Average (EWMA)

4.3.2.2 Flow Control Hardware Actions

5. Ingress-to-Egress Wrap

5.1 Ingress Cell Data Mover

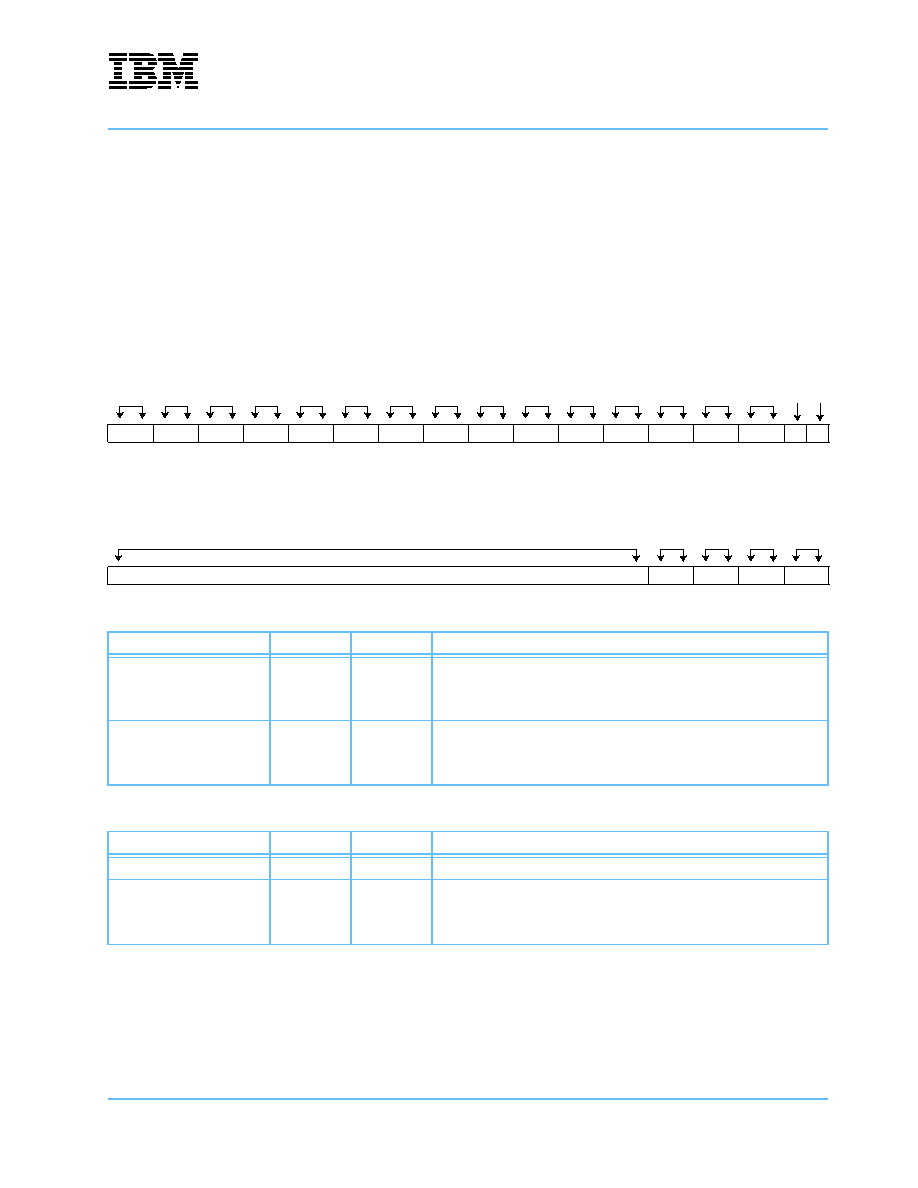

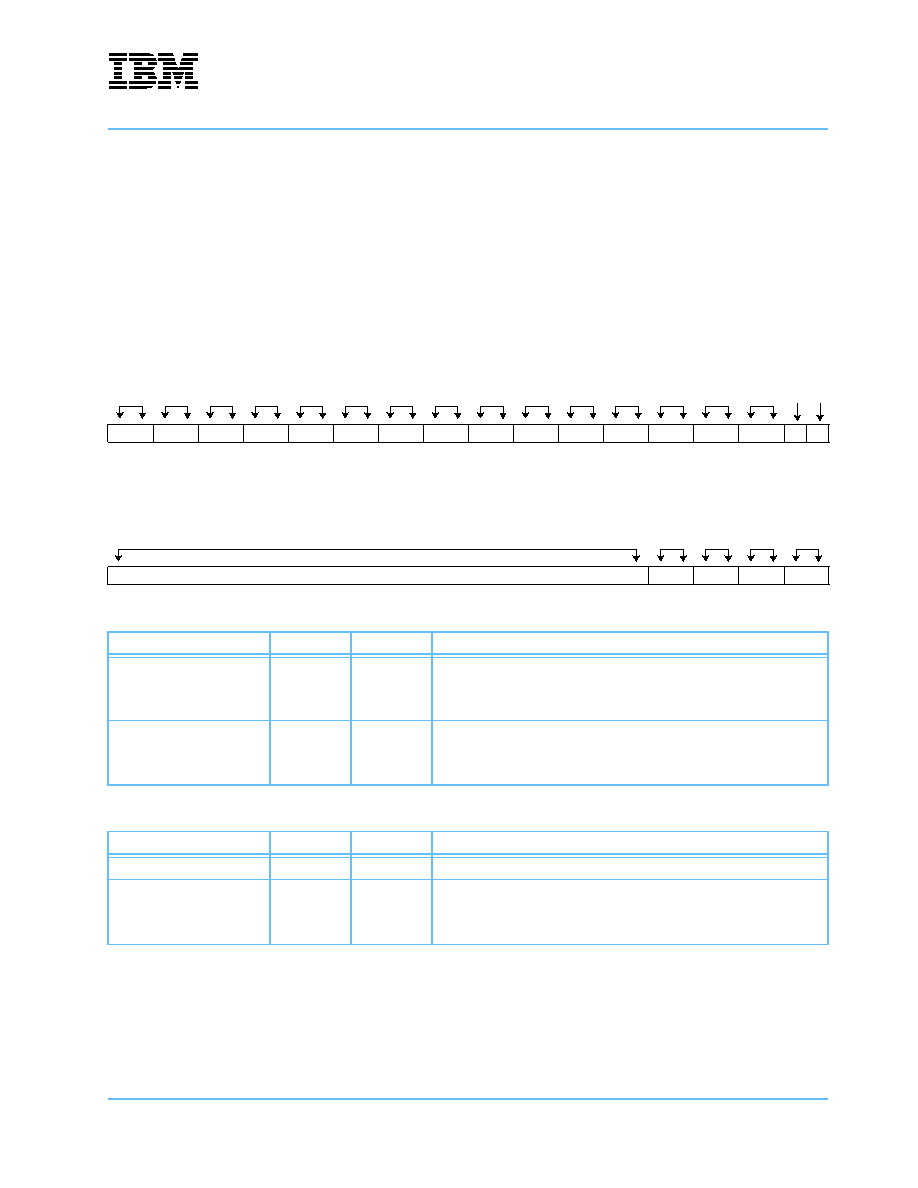

5.1.1 Cell Header

5.1.2 Frame Header

5.2 Ingress Cell Interface

5.3 Egress Cell Interface

5.4 Egress Cell Data Mover

6. Egress Enqueuer / Dequeuer / Scheduler

6.1 Functional Blocks

6.2 Operation

6.3 Egress Flow Control

6.3.1 Flow Control Hardware Facilities

6.3.2 Remote Egress Status Bus

6.3.2.1 Bus Sequence and Timing

6.3.2.2 Configuration

6.3.3 Hardware Function

6.3.3.1 Exponentially Weighted Moving Average

6.3.3.2 Flow Control Hardware Actions

6.4 The Egress Scheduler

6.4.1 Egress Scheduler Components

6.4.1.1 Scheduling Calendars

6.4.1.2 Flow Queues


6.4.1.3 Target Port Queues

6.4.2 Configuring Flow Queues

6.4.2.1 Additional Configuration Notes

6.4.3 Scheduler Accuracy and Capacity

7. Embedded Processor Complex

7.1 Overview

7.1.1 Thread Types

7.2 Dyadic Protocol Processor Unit (DPPU)

7.2.1 Core Language Processor (CLP)

7.2.1.1 Core Language Processor Address Map

7.2.2 CLP Opcode Formats

7.2.3 DPPU Coprocessors

7.2.4 Shared Memory Pool

7.3 CLP Opcode Formats

7.3.1 Control Opcodes

7.3.1.1 Nop Opcode

7.3.1.2 Exit Opcode

7.3.1.3 Test and Branch Opcode

7.3.1.4 Branch and Link Opcode

7.3.1.5 Return Opcode

7.3.1.6 Branch Register Opcode

7.3.1.7 Branch PC Relative Opcode

7.3.1.8 Branch Reg+Off Opcode

7.3.2 Data Movement Opcodes

7.3.2.1 Memory Indirect Opcode

7.3.2.2 Memory Address Indirect Opcode

7.3.2.3 Memory Direct Opcode

7.3.2.4 Scalar Access Opcode

7.3.2.5 Scalar Immediate Opcode

7.3.2.6 Transfer Quadword Opcode

7.3.2.7 Zero Array Opcode

7.3.3 Coprocessor Execution Opcodes

7.3.3.1 Execute Direct Opcode

7.3.3.2 Execute Indirect Opcode

7.3.3.3 Execute Direct Conditional Opcode

7.3.3.4 Execute Indirect Conditional Opcode

7.3.3.5 Wait Opcode

7.3.3.6 Wait and Branch Opcode

7.3.4 ALU Opcodes

7.3.4.1 Arithmetic Immediate Opcode

7.3.4.2 Logical Immediate Opcode

7.3.4.3 Compare Immediate Opcode

7.3.4.4 Load Immediate Opcode

7.3.4.5 Arithmetic Register Opcode

7.3.4.6 Count Leading Zeros Opcode

7.4 DPPU Coprocessors

7.4.1 Tree Search Engine Coprocessor

7.4.2 Data Store Coprocessor

7.4.2.1 Data Store Coprocessor Address Map


7.4.2.2 Data Store Coprocessor Commands

7.4.3 Control Access Bus (CAB) Coprocessor

7.4.3.1 CAB Coprocessor Address Map

7.4.3.2 CAB Access to NP2G Structures

7.4.3.3 CAB Coprocessor Commands

7.4.4 Enqueue Coprocessor

7.4.4.1 Enqueue Coprocessor Address Map

7.4.4.2 Enqueue Coprocessor Commands

7.4.5 Checksum Coprocessor

7.4.5.1 Checksum Coprocessor Address Map

7.4.5.2 Checksum Coprocessor Commands

7.4.6 String Copy Coprocessor

7.4.6.1 String Copy Coprocessor Address Map

7.4.6.2 String Copy Coprocessor Commands

7.4.7 Policy Coprocessor

7.4.7.1 Policy Coprocessor Address Map

7.4.7.2 Policy Coprocessor Commands

7.4.8 Counter Coprocessor

7.4.8.1 Counter Coprocessor Address Map

7.4.8.2 Counter Coprocessor Commands

7.4.9 Coprocessor Response Bus

7.4.9.1 Coprocessor Response Bus Address Map

7.4.9.2 Coprocessor Response Bus Commands

7.4.9.3 14-bit Coprocessor Response Bus

7.4.10 Semaphore Coprocessor

7.4.10.1 Semaphore Coprocessor Commands

7.4.10.2 Error Conditions

7.4.10.3 Software Use Models

7.5 Interrupts and Timers

7.5.1 Interrupts

7.5.1.1 Interrupt Vector Registers

7.5.1.2 Interrupt Mask Registers

7.5.1.3 Interrupt Target Registers

7.5.1.4 Software Interrupt Registers

7.5.2 Timers

7.5.2.1 Timer Interrupt Counters

7.6 Dispatch Unit

7.6.1 Port Configuration Memory

7.6.1.1 Port Configuration Memory Index Definition

7.6.2 Port Configuration Memory Contents Definition

7.6.3 Completion Unit

7.7 Hardware Classifier

7.7.1 Ingress Classification

7.7.1.1 Ingress Classification Input

7.7.1.2 Ingress Classification Output

7.7.2 Egress Classification

7.7.2.1 Egress Classification Input

7.7.2.2 Egress Classification Output

7.7.3 Completion Unit Label Generation

7.8 Policy Manager


7.9 Counter Manager

7.9.1 Counter Manager Usage

7.10 Semaphore Manager

8. Tree Search Engine

8.1 Overview

8.1.1 Addressing Control Store (CS)

8.1.2 D6 Control Store

8.1.3 Logical Memory Views of D6

8.1.4 Control Store Use Restrictions

8.1.5 Object Shapes

8.1.6 Illegal Memory Access

8.1.7 Memory Range Checking (Address Bounds Check)

8.2 Trees and Tree Searches

8.2.1 Input Key and Color Register for FM and LPM Trees

8.2.2 Input Key and Color Register for SMT Trees

8.2.3 Direct Table

8.2.3.1 Pattern Search Control Blocks (PSCB)

8.2.3.2 Leaves and Compare-at-End Operation

8.2.3.3 Cascade/Cache

8.2.3.4 Cache Flag and NrPSCBs Registers

8.2.3.5 Cache Management

8.2.3.6 Search Output

8.2.4 Tree Search Algorithms

8.2.4.1 FM Trees

8.2.4.2 LPM Trees

8.2.4.3 LPM PSCB Structure in Memory

8.2.4.4 LPM Compact PSCB Support

8.2.4.5 LPM Trees with Multibit Compare

8.2.4.6 SMT Trees

8.2.4.7 Compare-at-End Operation

8.2.4.8 Ropes

8.2.4.9 Aging

8.2.5 Tree Configuration and Initialization

8.2.5.1 The LUDefTable

8.2.5.2 TSE Free Lists (TSE_FL)

8.2.6 TSE Registers and Register Map

8.2.7 TSE Instructions

8.2.7.1 FM Tree Search (TS_FM)

8.2.7.2 LPM Tree Search (TS_LPM)

8.2.7.3 SMT Tree Search (TS_SMT)

8.2.7.4 Memory Read (MRD)

8.2.7.5 Memory Write (MWR)

8.2.7.6 Hash Key (HK)

8.2.7.7 Read LUDefTable (RDLUDEF) .................................................................................... 324

8.2.7.8 Compare-at-End (COMPEND) ..................................................................................... 326

8.2.7.9 Distinguish Position for Fast Table Update (DISTPOS_GDH) ..................................... 327

8.2.7.10 Read PSCB for Fast Table Update (RDPSCB_GDH) ................................................ 328

8.2.7.11 Write PSCB for Fast Table Update (WRPSCB_GDH) ............................................... 330

8.2.7.12 SetPatBit_GDH .......................................................................................................... 331

8.2.8 GTH Hardware Assist Instructions

8.2.8.1 Hash Key GTH (HK_GTH)

8.2.8.2 Read LUDefTable GTH (RDLUDEF GTH)

8.2.8.3 Tree Search Enqueue Free List (TSENQFL)

8.2.8.4 Tree Search Dequeue Free List (TSDQFL)

8.2.8.5 Read Current Leaf from Rope (RCLR)

8.2.8.6 Advance Rope with Optional Delete Leaf (ARDL)

8.2.8.7 Tree Leaf Insert Rope (TLIR)

8.2.8.8 Clear PSCB (CLRPSCB)

8.2.8.9 Read PSCB (RDPSCB)

8.2.8.10 Write PSCB (WRPSCB)

8.2.8.11 Push PSCB (PUSHPSCB)

8.2.8.12 Distinguish (DISTPOS)

8.2.8.13 TSR0 Pattern (TSR0PAT)

8.2.8.14 Pattern 2DTA (PAT2DTA)

8.2.9 Hash Functions

9. Serial / Parallel Manager Interface

9.1 SPM Interface Components

9.2 SPM Interface Data Flow

9.3 SPM Interface Protocol

9.4 SPM CAB Address Space

9.4.1 Byte Access Space

9.4.2 Word Access Space

9.4.3 EEPROM Access Space

9.4.3.1 EEPROM Single-Byte Access

9.4.3.2 EEPROM 2-Byte Access

9.4.3.3 EEPROM 3-Byte Access

9.4.3.4 EEPROM 4-Byte Access

10. Embedded PowerPC™ Subsystem

10.1 Description

10.2 Processor Local Bus and Device Control Register Buses

10.2.1 Processor Local Bus (PLB)

10.2.2 Device Control Register (DCR) Bus

10.3 PLB Address Map

10.4 CAB Address Map

10.5 Universal Interrupt Controller (UIC) Macro

10.6 PCI/PLB Bridge Macro

10.7 CAB Interface Macro

10.7.1 PowerPC CAB Address (PwrPC_CAB_Addr) Register

10.7.2 PowerPC CAB Data (PwrPC_CAB_Data) Register

10.7.3 PowerPC CAB Control (PwrPC_CAB_Cntl) Register

10.7.4 PowerPC CAB Status (PwrPC_CAB_Status) Register

10.7.5 PowerPC CAB Mask (PwrPC_CAB_Mask) Register

10.7.6 PowerPC CAB Write Under Mask Data (PwrPC_CAB_WUM_Data)

10.7.7 PCI Host CAB Address (Host_CAB_Addr) Register

10.7.8 PCI Host CAB Data (Host_CAB_Data) Register

10.7.9 PCI Host CAB Control (Host_CAB_Cntl) Register

10.7.10 PCI Host CAB Status (Host_CAB_Status) Register

10.7.11 PCI Host CAB Mask (Host_CAB_Mask) Register

10.7.12 PCI Host CAB Write Under Mask Data (Host_CAB_WUM_Data) Register

10.8 Mailbox Communications and DRAM Interface Macro

10.8.1 Mailbox Communications Between PCI Host and PowerPC Subsystem

10.8.2 PCI Interrupt Status (PCI_Interr_Status) Register

10.8.3 PCI Interrupt Enable (PCI_Interr_Ena) Register

10.8.4 PowerPC Subsystem to PCI Host Message Resource (P2H_Msg_Resource) Register

10.8.5 PowerPC Subsystem to Host Message Address (P2H_Msg_Addr) Register

10.8.6 PowerPC Subsystem to Host Doorbell (P2H_Doorbell) Register

10.8.7 Host to PowerPC Subsystem Message Address (H2P_Msg_Addr) Register

10.8.8 Host to PowerPC Subsystem Doorbell (H2P_Doorbell) Register

10.8.9 Mailbox Communications Between PowerPC Subsystem and EPC

10.8.10 EPC to PowerPC Subsystem Resource (E2P_Msg_Resource) Register

10.8.11 EPC to PowerPC Subsystem Message Address (E2P_Msg_Addr) Register

10.8.12 EPC to PowerPC Subsystem Doorbell (E2P_Doorbell) Register

10.8.13 EPC Interrupt Vector Register

10.8.14 EPC Interrupt Mask Register

10.8.15 PowerPC Subsystem to EPC Message Address (P2E_Msg_Addr) Register

10.8.16 PowerPC Subsystem to EPC Doorbell (P2E_Doorbell) Register

10.8.17 Mailbox Communications Between PCI Host and EPC

10.8.18 EPC to PCI Host Resource (E2H_Msg_Resource) Register

10.8.19 EPC to PCI Host Message Address (E2H_Msg_Addr) Register

10.8.20 EPC to PCI Host Doorbell (E2H_Doorbell) Register

10.8.21 PCI Host to EPC Message Address (H2E_Msg_Addr) Register

10.8.22 PCI Host to EPC Doorbell (H2E_Doorbell) Register

10.8.23 Message Status (Msg_Status) Register

10.8.24 PowerPC Boot Redirection Instruction Registers (Boot_Redir_Inst)

10.8.25 PowerPC Machine Check (PwrPC_Mach_Chk) Register

10.8.26 Parity Error Status and Reporting

10.8.27 Slave Error Address Register (SEAR)

10.8.28 Slave Error Status Register (SESR)

10.8.29 Parity Error Counter (Perr_Count) Register

10.9 System Start-Up and Initialization

10.9.1 NP2G Resets

10.9.2 Systems Initialized by External PCI Host Processors

10.9.3 Systems with PCI Host Processors and Initialized by PowerPC Subsystem

10.9.4 Systems Without PCI Host Processors and Initialized by PowerPC Subsystem

10.9.5 Systems Without PCI Host or Delayed PCI Configuration and Initialized by EPC

11. Reset and Initialization

11.1 Overview

11.2 Step 1: Set I/Os

11.3 Step 2: Reset the NP2G

11.4 Step 3: Boot

11.4.1 Boot the Embedded Processor Complex (EPC)

11.4.2 Boot the PowerPC

11.4.3 Boot Summary

11.5 Step 4: Setup 1

11.6 Step 5: Diagnostics 1

11.7 Step 6: Setup 2

11.8 Step 7: Hardware Initialization

11.9 Step 8: Diagnostics 2

11.10 Step 9: Operational

11.11 Step 10: Configure

11.12 Step 11: Initialization Complete

12. Debug Facilities

12.1 Debugging Picoprocessors

12.1.1 Single Step

12.1.2 Break Points

12.1.3 CAB Accessible Registers

12.2 RISCWatch

13. Configuration

13.1 Memory Configuration

13.1.1 Memory Configuration Register (Memory_Config)

13.1.2 DRAM Parameter Register (DRAM_Parm)

13.1.3 Delay Calibration Registers

13.2 Toggle Mode Register

13.3 BCI Format Register

13.3.1 Toggle Mode

13.3.1.1 DSU Information

13.3.1.2 ENQE Command and Qclass 5 or 6

13.4 Egress Reassembly Sequence Check Register (E_Reassembly_Seq_Ck)

13.5 Aborted Frame Reassembly Action Control Register (AFRAC)

13.6 Packing Registers

13.6.1 Packing Control Register (Pack_Ctrl)

13.6.2 Packing Delay Register (Pack_Dly)

13.7 Initialization Control Registers

13.7.1 Initialization Register (Init)

13.7.2 Initialization Done Register (Init_Done)

13.8 NP2G Ready Register (NPR_Ready)

13.9 Phase-Locked Loop Registers

13.9.1 Phase-Locked Loop Fail Register (PLL_Lock_Fail)

13.10 Software Controlled Reset Register (Soft_Reset)

13.11 Ingress Free Queue Threshold Configuration

13.11.1 BCB_FQ Threshold Registers

13.11.2 BCB_FQ Threshold for Guided Traffic (BCB_FQ_Th_GT)

13.11.3 BCB_FQ_Threshold_0 Register (BCB_FQ_TH_0)

13.11.4 BCB_FQ_Threshold_1 Register (BCB_FQ_TH_1)

13.11.5 BCB_FQ_Threshold_2 Register (BCB_FQ_Th_2)

13.12 I-GDQ Threshold Register (I-GDQ_Th)

13.13 Ingress Target DMU Data Storage Map Register (I_TDMU_DSU)

13.14 Embedded Processor Complex Configuration

13.14.1 PowerPC Core Reset Register (PowerPC_Reset)

13.14.2 PowerPC Boot Redirection Instruction Registers (Boot_Redir_Inst)

13.14.3 Watch Dog Reset Enable Register (WD_Reset_Ena)

13.14.4 Boot Override Register (Boot_Override)

13.14.5 Thread Enable Register (Thread_Enable)

13.14.6 GFH Data Disable Register (GFH_Data_Dis)

13.14.7 Ingress Maximum DCB Entries (I_Max_DCB)

13.14.8 Egress Maximum DCB Entries (E_Max_DCB)

13.14.9 Ordered Semaphore Enable Register (Ordered_Sem_Ena)

13.14.10 Enhanced Classification Enable Register (Enh_HWC_Ena)

13.15 Flow Control Structures

13.15.1 Ingress Flow Control Hardware Structures

13.15.1.1 Ingress Transmit Probability Memory Register (I_Tx_Prob_Mem)

13.15.1.2 Ingress Pseudorandom Number Register (I_Rand_Num)

13.15.1.3 Free Queue Thresholds Register (FQ_Th)

13.15.2 Egress Flow Control Structures

13.15.2.1 Egress Transmit Probability Memory (E_Tx_Prob_Mem) Register

13.15.2.2 Egress Pseudorandom Number (E_Rand_Num)

13.15.2.3 P0 Twin Count Threshold (P0_Twin_Th)

13.15.2.4 P1 Twin Count Threshold (P1_Twin_Th)

13.15.2.5 Egress P0 Twin Count EWMA Threshold Register (E_P0_Twin_EWMA_Th)

13.15.2.6 Egress P1 Twin Count EWMA Threshold Register (E_P1_Twin_EWMA_Th)

13.15.3 Exponentially Weighted Moving Average Constant (K) Register (EWMA_K)

13.15.4 Exponentially Weighted Moving Average Sample Period (T) Register (EWMA_T)

13.15.5 Flow Control Force Discard Register (FC_Force_Discard)

13.16 Egress CDM Stack Threshold Register (E_CDM_Stack_Th)

13.17 Free Queue Extended Stack Maximum Size (FQ_ES_Max) Register

13.18 Egress Free Queue Thresholds

13.18.1 FQ_ES_Threshold_0 Register (FQ_ES_Th_0)

13.18.2 FQ_ES_Threshold_1 Register (FQ_ES_Th_1)

13.18.3 FQ_ES_Threshold_2 Register (FQ_ES_Th_2)

13.19 Egress Frame Data Queue Thresholds (E_GRx_GBx_th)

13.20 Discard Flow QCB Register (Discard_QCB)

13.21 Bandwidth Allocation Register (BW_Alloc_Reg)

13.22 Miscellaneous Controls Register (MISC_CNTRL)

13.23 Frame Control Block FQ Size Register (FCB_FQ_Max)

13.24 Data Mover Unit (DMU) Configuration Registers

13.25 Frame Pad Configuration Register (DMU_Pad)

13.26 Ethernet Jumbo Frame Size Register (EN_Jumbo_FS)

13.27 QD Accuracy Register (QD_Acc)

13.28 Packet Over SONET Control Register (POS_Ctrl)

13.28.1 Initial CRC Value Determination

13.29 Packet Over SONET Maximum Frame Size (POS_Max_FS)

13.30 Ethernet Encapsulation Type Register for Control (E_Type_C)

13.31 Ethernet Encapsulation Type Register for Data (E_Type_D)

13.32 Source Address Array (SA_Array)

13.33 Destination Address Array (DA_Array)

13.34 Programmable I/O Register (PIO_Reg)

13.35 Ingress-to-Egress Wrap Configuration Registers

13.35.1 IEW Configuration 1 Register (IEW_Config1)

13.35.2 IEW Configuration 2 Register (IEW_Config2)

13.35.3 IEW Initialization Register (IEW_Init)

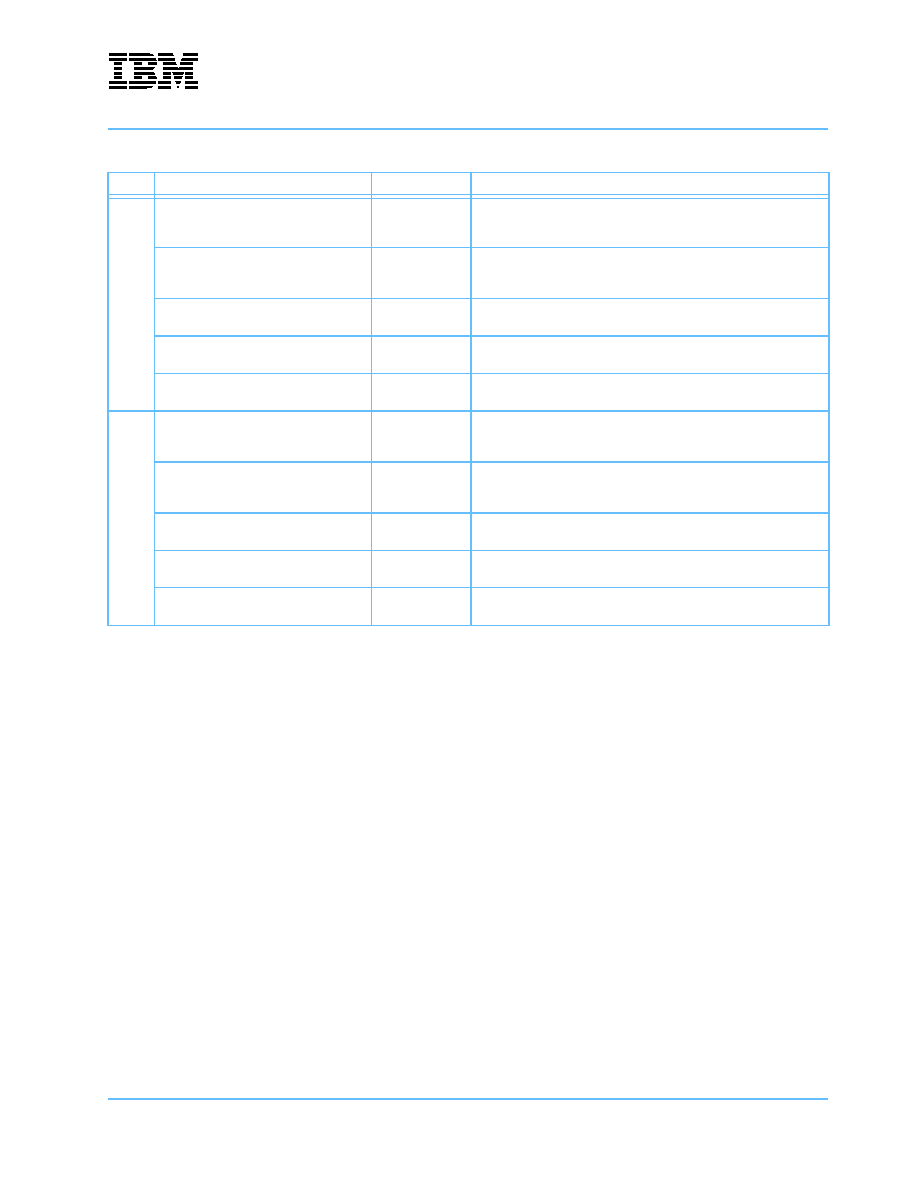

14. Electrical and Thermal Specifications

14.1 Driver Specifications

14.2 Receiver Specifications

14.3 Other Driver and Receiver Specifications

15. Glossary of Terms and Abbreviations

Revision Log

List of Tables

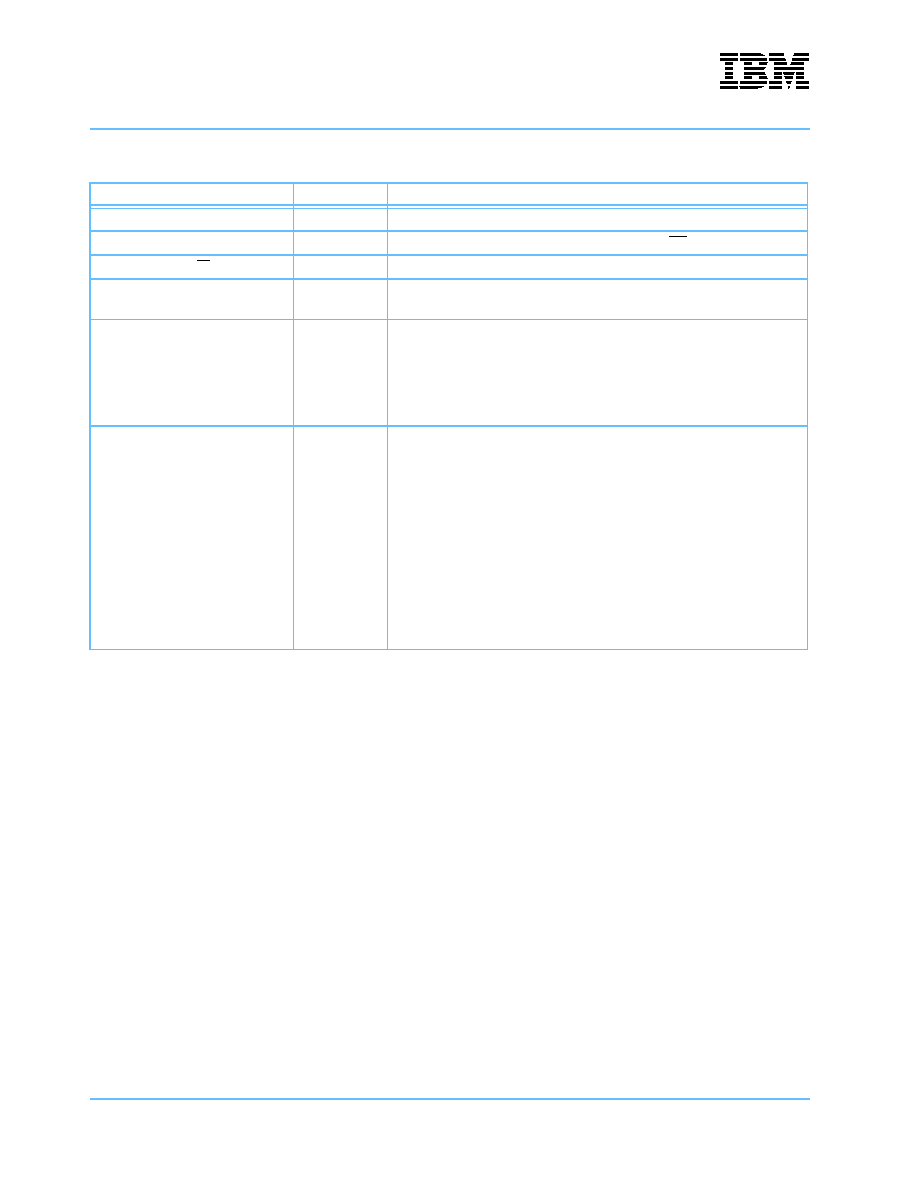

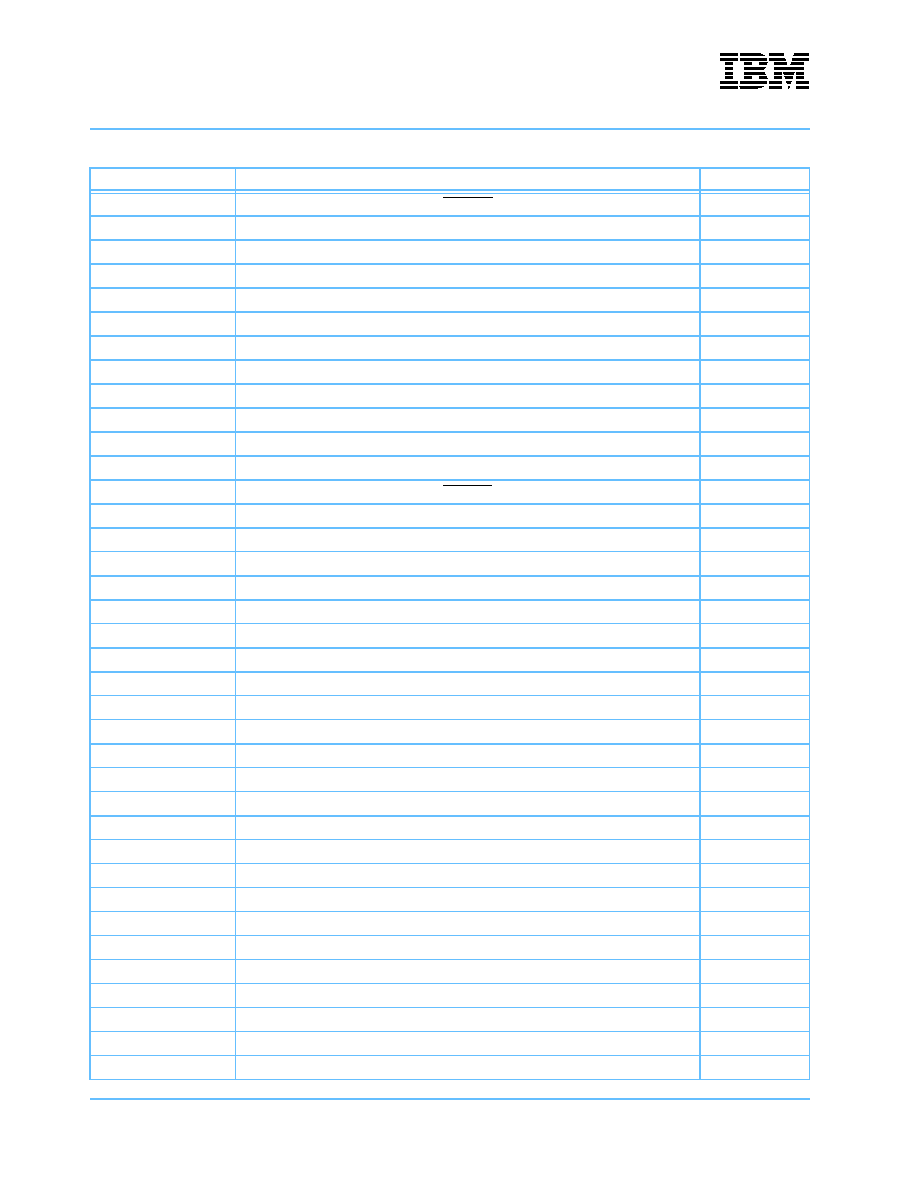

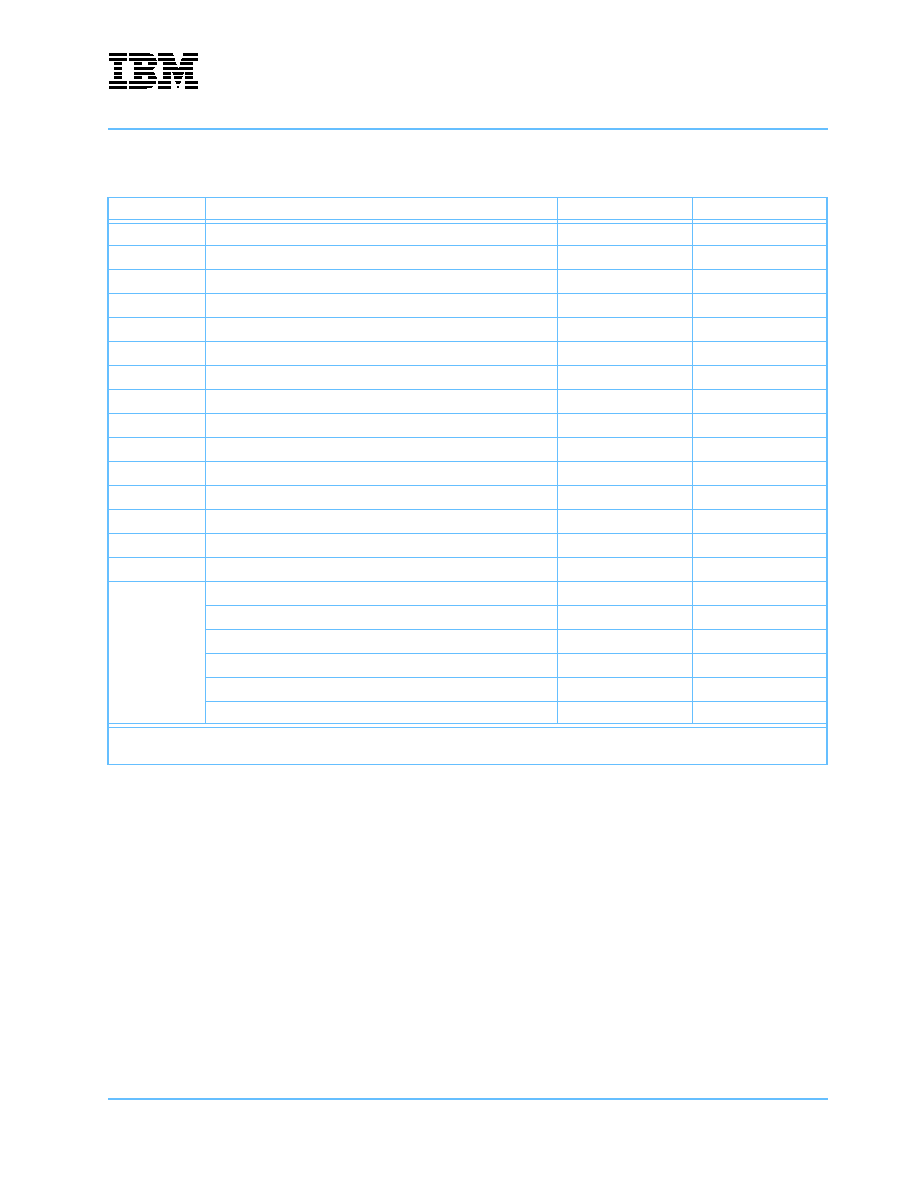

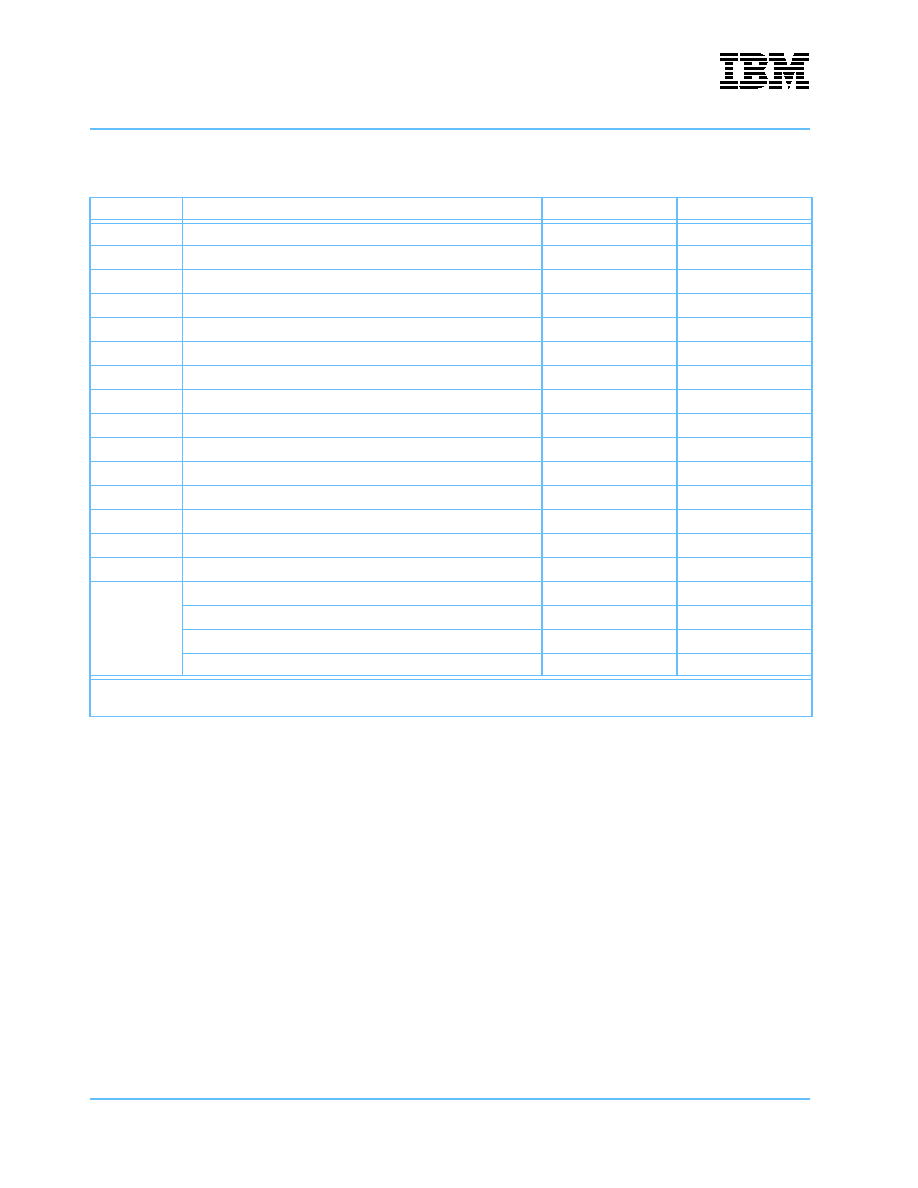

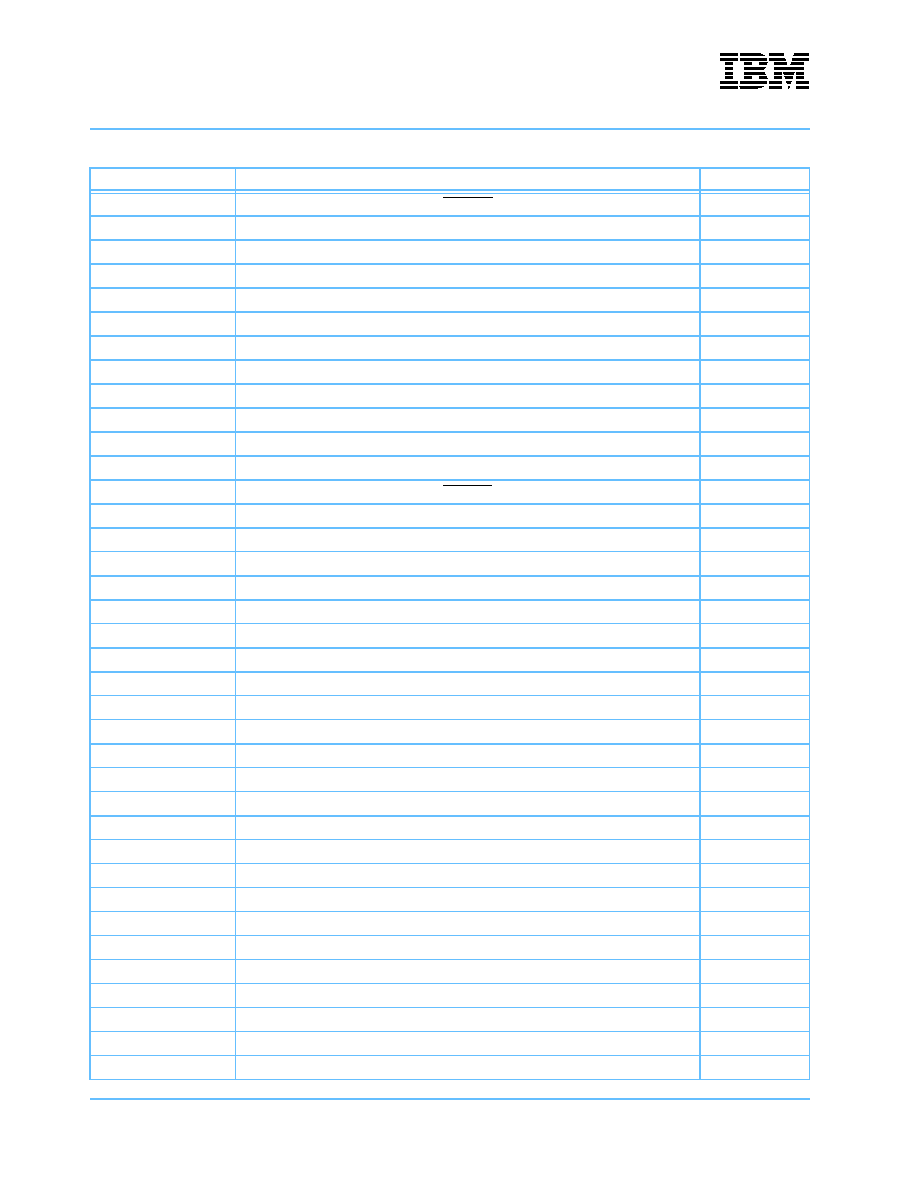

Table 2-1. Signal Pin Functions

Table 2-2. Ingress-to-Egress Wrap (IEW) Pins

Table 2-3. Flow Control Pins

Table 2-4. Z0 ZBT SRAM Interface Pins

Table 2-5. Z1 ZBT SRAM Interface Pins

Table 2-6. ZBT SRAM Timing Diagram Legend (for Figure 2-2)

Table 2-7. DDR Timing Diagram Legend (for Figure 2-3, Figure 2-4, and Figure 2-5)

Table 2-8. DDR Timing Diagram Legend (for Figure 2-3, Figure 2-4, and Figure 2-5)

Table 2-9. DDR Timing Diagram Legend (for Figure 2-3, Figure 2-4, and Figure 2-5)

Table 2-10. D3 and D2 Interface Pins

Table 2-11. D0 Memory Pins

Table 2-12. D4_0 and D4_1 Interface Pins

Table 2-13. D6_5, D6_4, D6_3, D6_2, D6_1, and D6_0 Memory Pins

Table 2-14. DS1 and DS0 Interface Pins

Table 2-15. PMM Interface Pins

Table 2-16. PMM Interface Pin Multiplexing

Table 2-17. PMM Interface Pins: Debug (DMU_D Only)

Table 2-18. Parallel Data Bit to 8B/10B Position Mapping (TBI Interface)

Table 2-19. PMM Interface Pins: TBI Mode

Table 2-20. TBI Timing Diagram Legend (for Figure 2-8)

Table 2-21. PMM Interface Pins: GMII Mode

Table 2-22. GMII Timing Diagram Legend (for Figure 2-9)

Table 2-23. PMM Interface Pins: SMII Mode

Table 2-24. SMII Timing Diagram Legend (for Figure 2-10)

Table 2-25. POS Signals

Table 2-26. POS Timing Diagram Legend (for Figure 2-11 and Figure 2-12)

Table 2-27. PCI Pins

Table 2-28. PCI Timing Diagram Legend (for Figure 2-13)

Table 2-29. Management Bus Pins

Table 2-30. SPM Bus Timing Diagram Legend (for Figure 2-14)

Table 2-31. Miscellaneous Pins

Table 2-32. Signals Requiring Pull-Up or Pull-Down

Table 2-33. Pins Requiring Connections to Other Pins

Table 2-34. Alternate Wiring for Pins Requiring Connections to Other Pins

Table 2-35. Mechanical Specifications

Table 2-36. JTAG Compliance-Enable Inputs

Table 2-37. Implemented JTAG Public Instructions

Table 2-38. Complete Signal Pin Listing by Signal Name


Table 2-39. Complete Signal Pin Listing by Grid Position ............................................................................98

Table 3-1. Ingress Ethernet Counters .........................................................................................................112

Table 3-2. Egress Ethernet Counters .........................................................................................................114

Table 3-3. Ethernet Support ........................................................................................................................117

Table 3-4. DMU and Framer Configurations ...............................................................................................118

Table 3-5. Receive Counter RAM Addresses for Ingress POS MAC .........................................................122

Table 3-6. Transmit Counter RAM Addresses for Egress POS MAC .........................................................124

Table 3-7. POS Support ..............................................................................................................................126

Table 4-1. Flow Control Hardware Facilities ...............................................................................................133

Table 5-1. Cell Header Fields .....................................................................................................................137

Table 5-2. Frame Header Fields .................................................................................................................139

Table 6-1. Flow Control Hardware Facilities ...............................................................................................148

Table 6-2. Flow Queue Parameters ............................................................................................................152

Table 6-3. Valid Combinations of Scheduler Parameters ...........................................................................152

Table 6-4. Configure a Flow QCB ...............................................................................................................158

Table 7-1. Core Language Processor Address Map ...................................................................................169

Table 7-2. Shared Memory Pool .................................................................................................................172

Table 7-3. Condition Codes (Cond Field) ...................................................................................................173

Table 7-4. AluOp Field Definition ................................................................................................................193

Table 7-5. Lop Field Definition ....................................................................................................................195

Table 7-6. Arithmetic Opcode Functions .....................................................................................................199

Table 7-7. Coprocessor Instruction Format ................................................................................................203

Table 7-8. Data Store Coprocessor Address Map ......................................................................................204

Table 7-9. Ingress DataPool Byte Address Definitions ...............................................................................206

Table 7-10. Egress Frames DataPool Quadword Addresses .....................................................................208

Table 7-11. DataPool Byte Addressing with Cell Header Skip ...................................................................209

Table 7-12. Number of Frame-bytes in the DataPool .................................................................................210

Table 7-13. WREDS Input ..........................................................................................................................212

Table 7-14. WREDS Output ........................................................................................................................212

Table 7-15. RDEDS Input ...........................................................................................................................213

Table 7-16. RDEDS Output ........................................................................................................................214

Table 7-17. WRIDS Input ............................................................................................................................214

Table 7-18. WRIDS Output .........................................................................................................................214

Table 7-19. RDIDS Input .............................................................................................................................215

Table 7-20. RDIDS Output ..........................................................................................................................215

Table 7-21. RDMOREE Input .....................................................................................................................216

Table 7-22. RDMOREE Output ...................................................................................................................216

Table 7-23. RDMOREI Input .......................................................................................................................217

Table 7-24. RDMOREI Output ....................................................................................................................217


Table 7-25. LEASETWIN Output ................................................................................................................ 218

Table 7-26. EDIRTY Inputs ........................................................................................................................ 218

Table 7-27. EDIRTY Output ....................................................................................................................... 219

Table 7-28. IDIRTY Inputs .......................................................................................................................... 220

Table 7-29. IDIRTY Output ......................................................................................................................... 220

Table 7-30. CAB Coprocessor Address Map ............................................................................................. 220

Table 7-31. CAB Address Field Definitions ................................................................................................ 221

Table 7-32. CAB Address, Functional Island Encoding .............................................................................. 221

Table 7-33. CABARB Input ......................................................................................................................... 222

Table 7-34. CABACCESS Input ................................................................................................................. 223

Table 7-35. CABACCESS Output .............................................................................................................. 223

Table 7-36. Enqueue Coprocessor Address Map ...................................................................................... 224

Table 7-37. Ingress FCBPage Description ................................................................................................. 225

Table 7-38. Egress FCBPage Description .................................................................................................. 229

Table 7-39. ENQE Target Queues ............................................................................................................. 236

Table 7-40. Egress Target Queue Selection Coding .................................................................................. 236

Table 7-41. Egress Target Queue Parameters .......................................................................................... 237

Table 7-42. Type Field for Discard Queue ................................................................................................. 237

Table 7-43. ENQE Command Input ............................................................................................................ 237

Table 7-44. Egress Queue Class Definitions .............................................................................................. 238

Table 7-45. ENQI Target Queues ............................................................................................................... 238

Table 7-46. Ingress Target Queue Selection Coding ................................................................................. 239

Table 7-47. Ingress Target Queue FCBPage Parameters ......................................................................... 239

Table 7-48. ENQI Command Input ............................................................................................................. 239

Table 7-49. Ingress-Queue Class Definition ............................................................................................... 240

Table 7-50. ENQCLR Command Input ....................................................................................................... 240

Table 7-51. ENQCLR Output ...................................................................................................................... 240

Table 7-52. RELEASE_LABEL Output ....................................................................................................... 240

Table 7-53. Checksum Coprocessor Address Map .................................................................................... 241

Table 7-54. GENGEN/GENGENX Command Inputs ................................................................................. 243

Table 7-55. GENGEN/GENGENX/GENIP/GENIPX Command Outputs .................................................... 243

Table 7-56. GENIP/GENIPX Command Inputs .......................................................................................... 244

Table 7-57. CHKGEN/CHKGENX Command Inputs .................................................................................. 244

Table 7-58. CHKGEN/CHKGENX/CHKIP/CHKIPX Command Outputs ..................................................... 245

Table 7-59. CHKIP/CHKIPX Command Inputs ........................................................................................... 245

Table 7-60. String Copy Coprocessor Address Map .................................................................................. 246

Table 7-61. StrCopy Command Input ......................................................................................................... 246

Table 7-62. StrCopy Command Output ...................................................................................................... 247

Table 7-63. Policy Coprocessor Address Map ........................................................................................... 247
